Introducing the Kafka Producer Proxy

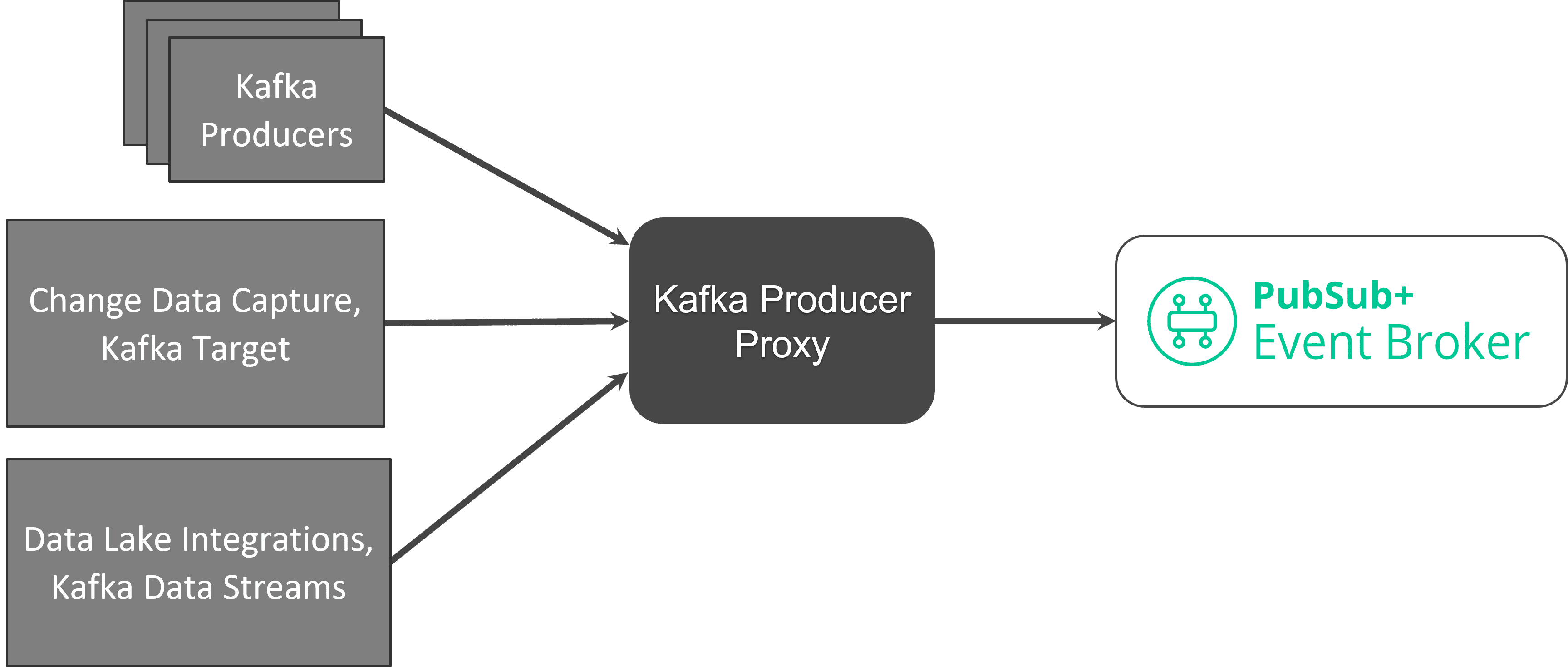

Heads up to those of you building and integrating with Kafka publishing solutions! If you are using or expanding an event mesh with Solace PubSub+ Platform and have existing Kafka publisher applications or 3rd-party products that publish data into Kafka, such as change data capture (CDC) products like Qlik, Debezium, GoldenGate, or other packaged event sources, then read on.

Solace PubSub+ brokers enable event streaming across cloud and on-premises environments. To complement existing Kafka integration options, such as a customizable source and sink connector framework and an integrated Kafka bridge, we’ve now made available an open-source Kafka producer proxy.

This exceptionally useful open-source utility proxies a Kafka producer connection to a Solace broker by performing protocol translation. The proxy accepts incoming connection requests from Kafka producer clients, and whenever a client produces a record, the proxy automatically converts it to a Solace message and forwards it to a Solace broker, without any code changes on the Kafka producer client and without the need to run a Kafka cluster! The proxy effectively is the Kafka “broker.”

Messages are sent onto Solace using Guaranteed (persistent) delivery. The topic and payload are copied over, and the partition key – if specified – is added as a Base64-encoded User Property header called JMSXGroupID that can be accessed by consuming Solace, AMQP, and MQTT applications. This allows for interoperability with Solace’s partitioned queues feature, which was recently released in April 2023. Note that the key is Base64-encoded as a Kafka key can be any Object, not just a String.
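To make the key handling concrete, here is a minimal sketch of how a consuming application might recover the original Kafka key from the JMSXGroupID property. It assumes the property value is plain standard Base64 of the serialized key bytes; the helper names and the sample key are hypothetical and not taken from the proxy's code.

```python
import base64

# Property name as described above; everything else here is illustrative.
GROUP_ID_PROPERTY = "JMSXGroupID"


def encode_key(key_bytes: bytes) -> str:
    """Base64-encode serialized Kafka key bytes so they fit in a string property."""
    return base64.b64encode(key_bytes).decode("ascii")


def decode_key(property_value: str) -> bytes:
    """Reverse the encoding on the consumer side to get the raw key bytes back."""
    return base64.b64decode(property_value)


# Simulated user properties of a received message (hypothetical key "AC123").
user_properties = {GROUP_ID_PROPERTY: encode_key(b"AC123")}
original_key = decode_key(user_properties[GROUP_ID_PROPERTY])
```

If the producer used a String key serializer, calling `original_key.decode("utf-8")` would give back the text key; for other key types the consumer needs the matching deserializer.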

There is also an option to automatically convert any separator characters in the Kafka topic to the Solace multi-level topic separator: “/”. For example: flights.us.pos Kafka topic would get translated to flights/us/pos in Solace.
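The translation itself is simple to picture. The sketch below is not the proxy's implementation; it is a hypothetical helper that assumes the set of separator characters is configurable and defaults to the period used in the example above.

```python
def to_solace_topic(kafka_topic: str, separators: str = ".") -> str:
    """Replace every configured separator character with the Solace level separator."""
    # Build a translation table mapping each separator character to "/".
    table = str.maketrans({ch: "/" for ch in separators})
    return kafka_topic.translate(table)


solace_topic = to_solace_topic("flights.us.pos")
```

Passing a wider separator set, for example `to_solace_topic("flights_us.pos", separators="._")`, would treat underscores as level boundaries too.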

Obviously, the best way to leverage the full power of PubSub+ brokers is to use a more standardized JMS connection, or to recode the app to use a native Solace API as the publish-subscribe I/O interface, but this proxy provides an easy way to leverage a distributed event mesh without making any code changes to the publisher. It can be a useful testing tool for publishing existing event streams directly to Solace PubSub+.

Once the proxy is running, all the producer client needs to do is change the connection parameters to point at the host and port where the proxy is listening. The proxy currently requires SASL authentication. More details are available at this GitHub page.
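As a rough illustration of that change, the sketch below assembles the standard Kafka client settings a producer would typically need. The property names are regular Kafka client configuration keys, but the host, port, and credentials are placeholders, and the choice of the PLAIN SASL mechanism is an assumption; check the GitHub page for what the proxy actually supports.

```python
def proxy_producer_config(host: str, port: int, username: str, password: str,
                          use_tls: bool = False) -> dict:
    """Build Kafka producer settings that point at the proxy instead of a Kafka cluster."""
    return {
        # The proxy's listen address takes the place of the Kafka bootstrap servers.
        "bootstrap.servers": f"{host}:{port}",
        "security.protocol": "SASL_SSL" if use_tls else "SASL_PLAINTEXT",
        # Assumption: PLAIN mechanism; the post only says SASL is required.
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": (
            "org.apache.kafka.common.security.plain.PlainLoginModule required "
            f'username="{username}" password="{password}";'
        ),
    }


def to_properties(config: dict) -> str:
    """Render the settings as a Java-style .properties file body."""
    return "\n".join(f"{key}={value}" for key, value in config.items())


# Placeholder values for illustration only.
config = proxy_producer_config("proxy.example.com", 9092, "my-user", "my-password")
properties_text = to_properties(config)
```

The rest of the producer's configuration (serializers, batching, and so on) would stay as it is.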

If you want to see it in action, I recently published a video demonstrating how to clone, run, and use the Proxy:

If you feel like getting your hands dirty, the code is open-source and easy to modify and extend for your specific use case. For example, if you know the payload format that you’re sending (e.g. JSON) and the schema, writing a small unmarshaller class to extract important portions of the payload and build a more dynamic, descriptive Solace topic wouldn’t be too hard.
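Here is a small sketch of that idea, written in Python for brevity rather than as a drop-in class for the proxy. It assumes a JSON payload with hypothetical fields `airline`, `flightNumber`, and `altitude`, and a hypothetical base topic; a real extension would use your own schema.

```python
import json


def build_topic(payload: bytes, base: str = "flights/us/pos") -> str:
    """Derive a descriptive multi-level Solace topic from fields in a JSON payload."""
    event = json.loads(payload)
    # Hypothetical field names; each extracted value becomes one topic level.
    values = [event["airline"], event["flightNumber"], event["altitude"]]
    # Keep "/" out of level values so a field cannot add extra levels by accident.
    levels = [str(value).replace("/", "_") for value in values]
    return "/".join([base] + levels)


sample = b'{"airline": "AC", "flightNumber": "123", "altitude": 34000}'
topic = build_topic(sample)
```

With the sample payload, the message would be published on `flights/us/pos/AC/123/34000` instead of one fixed topic.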

A Bit about Solace Topics

If you don’t know much about Solace topics, they are far more flexible than Kafka topics. You can learn more details comparing the two in my video here.

- Kafka topics are essentially log files, partitioned for performance and scalability; they are coarse-grained, heavy-weight file objects that reside inside the Kafka broker. For example:

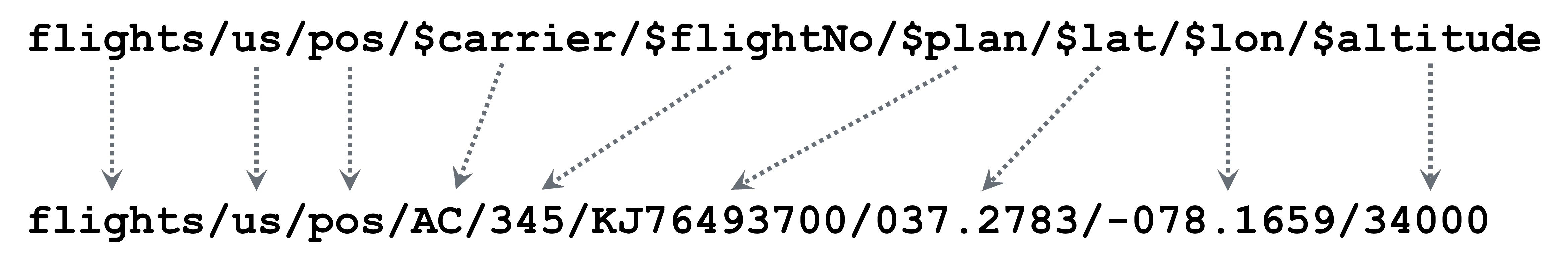

flights.us.posorflights.us.pos.AC - Solace topics are fine-grained, dynamic metadata attached to each message; they are multi-level (levels separated by a “/”) and each level typically describes a piece of data within the message payload. They are ephemeral, and can be unique for each published message. For example, this topic string is much more variable, and descriptive:

Solace subscribers also have the benefit of being able to use wildcards at any topic level. More on this later.

This level of granularity is not possible with Kafka topics, as each unique topic is a different log file on the Kafka cluster. In Solace, the topic describes the message contents, and the broker matches it against subscriptions to determine which consuming apps should receive the message.

Suppose you wanted to build a system where 1000s of downstream consumers wanted to receive flight location updates for just one particular flight. In Kafka, with a single “catch-all” topic, each app would have to consume every message within the topic, and filter within the client for the particular flight number. This would obviously waste a huge amount of bandwidth and computing power.

You may argue that you could also have a Kafka topic–per–flight-number, but that could be many thousands of Kafka topics (just for flight location data), and that scale can be difficult to work with. Every Kafka topic is some number of open file handles (based on partition number) residing on the Kafka broker server, and service providers often charge per topic and/or partition. This design also means that you lose global ordering of events, as they are spread across many different topic files, which must all be read in parallel.

Solace’s advanced topic filtering with wildcards allows the broker to filter on just the levels/metadata that your consumer application finds interesting, sending each consumer exactly what they want. For example, to subscribe to any events about all Air Canada flights in the US at 34,000 feet of altitude, you could insert an asterisk wildcard for flight number, plane, latitude, and longitude like this: flights/us/pos/AC/*/*/*/*/34000. Or you can indicate interest in events about the position of any US flight by ending the subscription with a greater-than symbol: flights/us/pos/>. You can learn all about wildcards here.
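To show how these two wildcards behave, here is a simplified matcher. It is only an illustration of the semantics described above, not the broker's algorithm: it treats a trailing `*` in a level as a prefix match within that single level and a final `>` as "one or more remaining levels", and it ignores edge cases such as empty levels. The sample topics use made-up flight, plane, and position values.

```python
def matches(subscription: str, topic: str) -> bool:
    """Simplified check of a published topic against a wildcard subscription."""
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, sub in enumerate(sub_levels):
        if sub == ">" and i == len(sub_levels) - 1:
            # ">" as the last level needs at least one remaining topic level.
            return len(topic_levels) > i
        if i >= len(topic_levels):
            return False  # subscription has more levels than the topic
        level = topic_levels[i]
        if sub.endswith("*"):
            # "*" matches within one level only; "AC*" would be a prefix match.
            if not level.startswith(sub[:-1]):
                return False
        elif sub != level:
            return False
    # Without a trailing ">", the level counts must be equal.
    return len(sub_levels) == len(topic_levels)


# Hypothetical published topic: airline/flight/plane/latitude/longitude/altitude.
published = "flights/us/pos/AC/123/B789/40.7/-74.0/34000"
at_altitude = matches("flights/us/pos/AC/*/*/*/*/34000", published)
any_us_position = matches("flights/us/pos/>", published)
```

Both subscriptions match the sample topic, while a topic ending in a different altitude would only match the second one.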

Try it Yourself

I encourage you to download the proxy and give it a try. Updates will be made occasionally as newer versions of Kafka and Solace are released, and to add new features. Let us know what you think, especially if you find a use case that this really helps with, or a publisher app that has issues using the proxy, over on Solace Community.

The post Introducing the Kafka Producer Proxy appeared first on Solace.